Will Superintelligent Machines Destroy Humanity?

September 13th, 2014

My money would be on nukes.

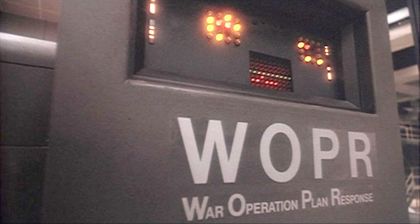

How about superintelligent machines somehow in control of nukes?

Via: Reason:

In Frank Herbert’s Dune books, humanity has long banned the creation of “thinking machines.” Ten thousand years earlier, their ancestors destroyed all such computers in a movement called the Butlerian Jihad, because they felt the machines controlled them. Human computers called Mentats serve as a substitute for the outlawed technology. The penalty for violating the Orange Catholic Bible’s commandment “Thou shalt not make a machine in the likeness of a human mind” was immediate death.

Should humanity sanction the creation of intelligent machines? That’s the pressing issue at the heart of the Oxford philosopher Nick Bostrom’s fascinating new book, Superintelligence. Bostrom cogently argues that the prospect of superintelligent machines is “the most important and most daunting challenge humanity has ever faced.” If we fail to meet this challenge, he concludes, malevolent or indifferent artificial intelligence (AI) will likely destroy us all.

Book: Superintelligence: Paths, Dangers, Strategies by Nick Bostrom